The Inevitable Dead End of Pluralist Science

Clearly, there are, indeed, some real situations in which physical reasons for a random-like outcome are also valid. But both kinds came to be seen as the same – when they are most certainly NOT! Indeed, the two get swapped around completely: with the Copenhagen Interpretation of Quantum Theory, randomness is promoted from being a mere consequence of multiple opposing causes to itself becoming the cause!

Thus, as this may be a massive undertaking, I feel it necessary to prepare for all the usual tricks. And, as I have learned from similar situations in the past, I do so by consciously laying out my current stance, so that I can register precisely where the two approaches begin to diverge significantly. We must, of course, start where the two approaches actually coincide – where physical reasons result in a situation best seen as completely random – and thereafter trace out the diverging paths (and consequent assumptions) from that mutually agreed situation.

We must also, of course, make clear the differing assumptions and principles of the two approaches. They are characterised by the very different principles of Holism and Plurality, which turn out to be the grounds for the two alternative approaches.

Holism sees Reality as one composed of multiple, mutually-affecting factors; while Plurality sees such factors as separate and eternal Laws.

These distinctions send the two alternatives off in entirely different directions, although both accept that such complex situations have contributions from all the involved factors. All phenomena, in one way or another, are results of many different factors acting simultaneously.

The crux of the problem is whether that joint causality is merely a SUM of independent, unchanging factors, OR the result of all the acting factors being changed by the presence of all the others, and delivering a result which then cannot be explained in the same way at all.

For, the pluralist approach, where phenomena are mere complications of unchanging natural laws, assumes that explanations can be arrived at by extracting each of those involved, one-at-a-time, until all the laws involved have been identified.

Whereas, from the Holist stance, that approach is seen as a pragmatic method of deriving simplified and idealised “laws” via experiment. Holism, in contrast, would somehow have to see how each of the various factors involved is modified by its context – that is, by all the other factors – and the same will be true for each and every one of the rest.
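The contrast between the two stances can be put schematically. The notation here is an illustrative assumption and not part of the original argument: $x_1,\dots,x_n$ stand for the acting factors, $R$ for the resulting phenomenon, $f_i$ for the supposed "law" of factor $i$, and $c_j$ for the values at which the other factors are held in a controlled experiment.

```latex
% Pluralist reading: fixed, independent laws, merely summed
R = \sum_{i=1}^{n} f_i(x_i)

% Holist reading: one joint dependence, not in general separable into such a sum
R = F(x_1, x_2, \dots, x_n)

% What a controlled experiment extracts for factor i:
% a slice of F with every other factor pinned at its imposed value c_j
\tilde{f}_i(x_i) = F(c_1, \dots, c_{i-1},\, x_i,\, c_{i+1}, \dots, c_n)
```

On this reading, each extracted $\tilde{f}_i$ is tied to the imposed conditions $c_j$, and summing such slices reproduces $F$ only in the special case where $F$ happens to be additive.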

Now, the pluralist view does approximate reality very well in carefully isolated, filtered and controlled situations, so, needless-to-say, that approach soon came to dominate our way of doing things. But it could never cope with certain results, the most significant being those occurring in qualitative developments, where wholly new features emerge, and most dramatically in what are termed Emergences (or in another parlance – Revolutions).

Plurality became the norm, for it could be made to be both predictable and very productive, as long as it was applied in stable situations (either naturally stable situations, if available, or purpose-built, man-made stabilities).

Now, this coloured the whole level of discoveries arrived at with such a stance and method, and also unavoidably caused multiple areas of study to be separated out from one another, simply because causal connections between them were unavailable.

Reality-as-is was literally constantly being sub-divided into many specialisms, or even disciplines, which could not be made to cohere in any simple approach. And, as the required “missing-links” just proliferated, the gulfs between these separate, created areas of study grew ever larger – even though the belief was that, in time, these inexplicable gaps would finally be filled with explanations of the same type as within the known and explained areas, but not, as yet, discovered.

It amounted to cases of investigators “painting themselves into corners”, by following their primary principle – Plurality.

Now, such a trajectory was historically unavoidable, because the gains of such pluralistic science, within its defined and maintained contexts, were so successful and so readily put to effective use.

Naturally and pragmatically, Mankind moved as swiftly as possible, where it could, and the flowering of this effective, pluralistic stance was, of course, Technology. But such approaches could never address the really big questions. And, of course, both understanding and development were replaced by increasing complexity and specialisation.

So, quite clearly, such a mechanistic conception could never cope with the emergence of the entirely new, which was always described as a particularly involved complexity, yet never explained as such.

Now, to return to the supposed “common ground”, this was interpreted as a complex, multi-factor situation, in which opposing contributions tended to “cancel out”, and the result was a situation best described as one in which random chance predominated. And, of course, such could indeed be true, in certain situations – for example in an enclosed volume of mixed gases, you could derive both temperature and pressure by assuming a situation of random movements and collisions.

But this required a continuing and stable overall situation. If the gases were reacting with one another, and other substances were present, and reacting too, then that stability might not persist for very long.

As always, such an assumption was invariably a simplification and an idealisation of the given situation.
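The gas example can be made concrete with a small sketch. It is only an illustration under the standard kinetic-theory idealisations (a stable, non-reacting ideal gas whose velocity components are Gaussian), which are exactly the simplifying assumptions discussed above. The function name `simulate_ideal_gas` and its parameters are hypothetical, chosen here for illustration.

```python
import math
import random

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def simulate_ideal_gas(n_molecules, mass_kg, temperature_k, volume_m3, seed=0):
    """Draw random molecular velocities, then recover temperature and pressure.

    Assumes an idealised, stable, non-reacting gas: nothing here models
    chemistry or any change in the overall situation.
    """
    rng = random.Random(seed)
    # Each velocity component is Gaussian with variance k_B * T / m.
    sigma = math.sqrt(K_B * temperature_k / mass_kg)

    total_v2 = 0.0
    for _ in range(n_molecules):
        vx = rng.gauss(0.0, sigma)
        vy = rng.gauss(0.0, sigma)
        vz = rng.gauss(0.0, sigma)
        total_v2 += vx * vx + vy * vy + vz * vz
    mean_v2 = total_v2 / n_molecules

    # Equipartition: (1/2) m <v^2> = (3/2) k_B T
    t_est = mass_kg * mean_v2 / (3.0 * K_B)
    # Kinetic theory: P = (1/3) (N / V) m <v^2>
    p_est = n_molecules * mass_kg * mean_v2 / (3.0 * volume_m3)
    return t_est, p_est


if __name__ == "__main__":
    # Roughly the mass of one nitrogen molecule; all values are illustrative.
    t, p = simulate_ideal_gas(20000, 4.65e-26, 300.0, 1.0e-3, seed=1)
    print(f"estimated temperature: {t:.1f} K")
    print(f"estimated pressure:    {p:.3e} Pa")
```

The estimated temperature lands close to the value used to generate the random velocities, and the pressure follows from the same average. Both results hold only because the sketch builds in a stable, purely random situation from the start.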

Now, such assumptions were frequently adhered to, as stabilities are the rule in most circumstances, so such ideas can be used with a measure of confidence. BUT, whenever any development began to occur, such a stance could never deliver any reasons why things behaved as they did.

In Sub Atomic Physics, for example, as investigations penetrated ever deeper into Reality, problems began to occur with increasing frequency. The discovery of the Quantum became the final straw!

For, in that area, phenomena occurred which blew apart prior fundamental assumptions about the nature of Reality at that level. For example, what had always been considered to be fundamental particles could seem to be acting like extended Waves. Yet, with the smallest of interventions, these could immediately revert to acting as particles again. These phenomena were deemed to be beyond explanation, and what was used instead of the usual explanations was the mathematics of random chance: not merely about a given position, but significantly about the probabilities of a particle being in each of a comprehensive set of positions (presumably relating to a wave) when it reverted to being a particle again.

The formalisms of waves were used in this very different and illegitimate context. At the same time, physical explanations were dumped completely. The only reliable means of predicting things was in the probability mathematics developed originally for Randomness.

Now, the question arises – what do the Copenhagenists think they are doing with this new position?

First, they have NO explanations as to why it happens the way that it does.

Second, they deal only in Forms – equations and relations! Do they consider these to be causes?

Well, maybe you have guessed their position. They have no adequate answers, and hide behind the known physically-existing random situations (as described earlier), and say that their equations have exactly the same validity as those. Not so! For, this researcher has managed to explain all the anomalies of the Double Slit Experiments merely by adding a universal substrate into the situation. You don’t need their idealist “interpretation” at all, whatever the Copenhagenists insist.

But, nevertheless, a great deal more still remains to be done. If the real problem is the incorrect Principle of Plurality, and the only possible alternative is the Principle of Holism, then we are still a very long way from a comprehensive explanation of natural phenomena from this stance.

The whole edifice of current Science (and not just the current problems in Sub Atomic Physics) is undoubtedly based upon flawed assumptions – not only in how things are explained, but also in Method. It is exactly how we do all our experiments!

To change track will be an enormous undertaking, and a look at the current state of “a Holist Science”, reveals that almost nothing has yet been achieved. Honourable exceptions, such as work by Darwin, Wallace and Miller are simply not enough to fully re-establish Science upon a coherent, consistent and comprehensive basis, for that will be an enormous task. But, there is a way!

First, we carry on with the current pluralist methods, BUT no longer stick to the pluralist principle as correctly describing the nature of Reality. It becomes a pragmatic method only. We can use it both to reveal the contents of a situation and to actually organise productive use, BUT we must abandon the myth of eternal Natural Laws revealed by those methods. We must accept that what we can extract in pluralist experiments are NOT “Laws”, but actually approximations confined to the particular imposed conditions, in which such were extracted.

Second, we must begin to devise and implement a new paradigm for scientific experiments. Stanley Miller’s famous experiments into the early stages in the Origin of Life, and Yves Couder’s “Walkers” experiments, clearly show two valid routes.

But, most important of all, scientists must become competent Philosophers!

Pragmatism and positivist philosophical stances are just too damaging. A clear, consistent and developed Materialism must be returned to, but it can no longer be the old Mechanical version.

We must learn the lessons of Hegel, and actively seek the Dichotomous Pairs that indicate conclusively that we have gone astray, and thereafter, via his dialectical investigations into premises, recast our mistaken assumptions and principles to transcend our own limitations, at each and every guaranteed impasse.

The main problem in Holist Science is the fact of the many, mutually-affecting factors in literally all situations in Reality-as-is. Plurality validated the farming of experimental Domains to simplify such situations, and in doing so cut down the affecting contributions either to a single one, or to one that was evidently dominant. So, with repeated experiments with different target relations, such investigations delivered, NOT Reality-as-is, but a simplified and idealised set up, with a recognition of the factors involved, but with each one, in a particular Domain, presenting a version, which could also be replicated to allow effective use of the idealised form extracted. But, the whole series of different tailor-made Domains, each with one of the factors as target, also delivered a list of the factors involved.

Pragmatically, each could be used effectively to some required end, but the list, though each one was idealised, would, as a full set, allow a theorist to attempt an explanation of what was seen in the unfettered and natural “Reality-as-is”. Though achieved using pluralist methods, and hence distorting each factor, a Holist theoretician could use that list in a very different way.

It was, after all, the situation in the past: including BOTH views had been the usual way of doing things, before Wave/Particle Duality and the Copenhagen stance brought that to an end.

Yet, of course, the new stance was not the same as before. For now, plurality was just a pragmatic trick, and the real Science was in Holist explanations.

The pluralist mistake of assuming eternal Natural Laws, which produced all phenomena merely by different summations, was no longer allowed. And the belief in Natural Laws was replaced by an acceptance that all extracted laws would not only be approximations for limited circumstances, but would also, inevitably, at some point produce contradictory concepts, and that only by unearthing the premises that had produced these, and changing them for something better, would the resulting impasse be transcended, and further theoretical development made.

The Holist scientist did not deal in supposedly eternal Natural Laws, but in factors that were indeed affected by their contexts. So, this new breed investigated how factors were changed by their contexts, and were always prepared to directly address major impasses when they arose.

Now, there are different ways of doing this sort of Science. In this theorist’s re-design of Miller’s Experiment, he realised that the provision of inactive pathways within the apparatus would facilitate certain interactions and their sequencing. So, a series of versions of the experiment were devised with such appropriate inactive channels, which were also equipped with non-intrusive sensors, sampling on a time-based schedule. Then, on a particular run with a given internal channelling, data collected from the various phases, would be taken and could be analysed afterwards to attempt to discover certain contexts at different times.

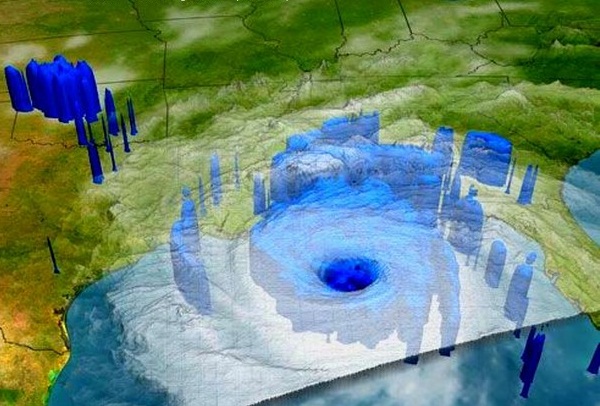

Something similar is currently done by Weather Forecasters in their simulation models linked to large numbers of sensors at weather stations, but the crucial difference in the New Miller’s Experiment was in the Control that was in the hands of the experimenters. That was never available to the weather Forecaster; he had to take what he was given, but the new holistic experiments allowed something greatly superior to their Thresholds, when one law was sidelined to bring in another. The Weather simulations with thresholds were a crude invention to attempt to deal with a real Holist situation, pluralistically.

In the described Miller-type experiments the investigation would be constantly re-designed, both in the inactive structures provided and in the kinds of sensors needed to maximise the information and allow progress to be made. Clearly such control is never available to weather forecasters.
